Key differences between serverless and containers

The table below shows how serverless and containers compare:

| Feature | Serverless | Containers |
| --- | --- | --- |
| Management overhead | Minimal | Moderate |
| Scalability | Automatic scaling | Manual or automatic scaling |
| Cold start | Might have cold start times | Faster start-up times |
| Resource utilisation | Optimised for individual function requests | Depends on the container size |
| Pricing model | Pay-per-use | Pay for provisioned resources |
| Flexibility | Limited control over runtime environment | Greater control over runtime environment |

Another point to consider: serverless functions run on shared Azure infrastructure. That infrastructure is secure, but in some industries shared environments don’t meet compliance requirements.

Similarities between serverless and containers

Serverless and containers facilitate building applications using a microservices architecture.

Both serverless and containers abstract the underlying infrastructure, freeing developers to focus on writing code rather than managing servers. They are also very scalable. Serverless architectures automatically scale in response to events, and container orchestration platforms like Kubernetes can scale container instances based on demand.
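To make demand-based scaling concrete, here is a minimal sketch of the ratio rule that the Kubernetes Horizontal Pod Autoscaler documentation describes: desired replicas = ceil(current replicas × current metric / target metric). The function name, the parameter names and the default bounds are illustrative assumptions, and the sketch ignores the tolerance and stabilisation behaviour a real autoscaler applies.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Estimate a replica count from the ratio of observed to target load.

    min_replicas and max_replicas are hypothetical bounds for this sketch.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current replica count by how far the metric is from its target.
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# Example: 4 replicas at 90% average CPU with a 60% target.
scaled_out = desired_replicas(4, 90, 60)
```

A serverless platform makes an equivalent decision for you per event, whereas with containers you choose the metric, the target and the bounds yourself.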

In addition, both serverless and container-based applications integrate well with DevOps practices, supporting continuous integration and continuous deployment (CI/CD) pipelines.

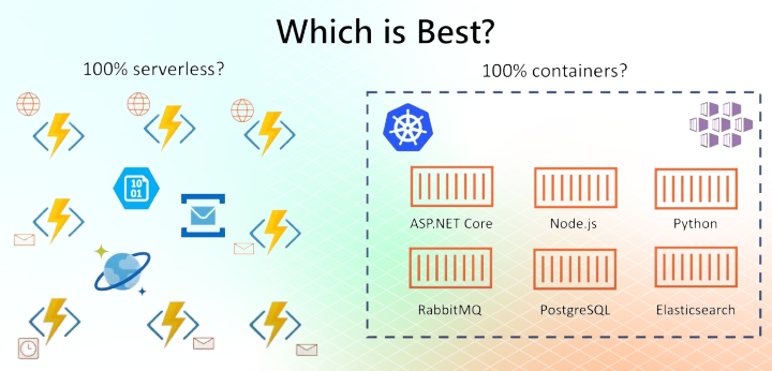

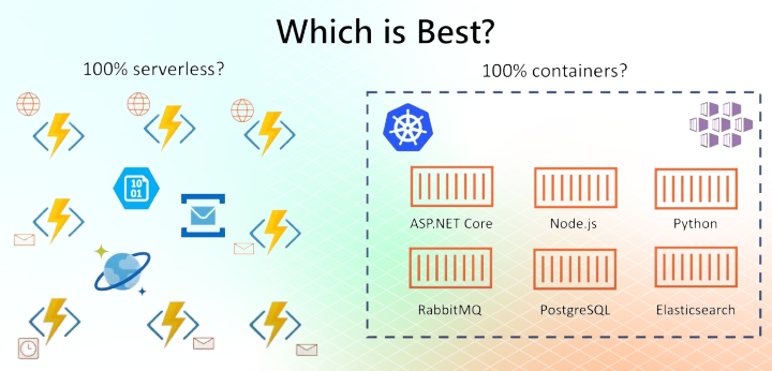

Which one should you choose?

Choosing between serverless and containers is crucial when modernising your application. So, which architecture should you choose?

- You can create a 100% serverless system where every piece of code is a small Azure Function, triggered by events, paired with PaaS services for storage, messaging and more.

- Or you can go 100% containerised, with a Kubernetes cluster running all of your microservices as containers.

Alongside that, you might even decide to containerise your databases and message queuing systems. Containerising everything results in a much more cross-cloud portable solution.

In other words: you can easily take your microservice application from Azure and run it in AWS or on-prem.

Why go for serverless

Going serverless in Azure, for example by adopting Azure Functions, is a solid choice in some scenarios.

With serverless, you don’t have to worry about what the file system is doing or about your environment dependencies, and you don’t have to set up Docker containers. If you have short-lived or sporadic workloads, serverless means you only pay when code runs.
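The pay-when-code-runs argument can be checked with some quick arithmetic. The sketch below compares a pay-per-use bill with a flat provisioned bill and finds the break-even volume. Every price and workload figure in it is a made-up placeholder, not a real Azure rate, and the billing formula (a per-request fee plus a fee per GB-second of execution) is a simplifying assumption.

```python
def pay_per_use_cost(invocations: int,
                     avg_duration_s: float,
                     memory_gb: float,
                     price_per_gb_second: float,
                     price_per_million_requests: float) -> float:
    """Monthly cost when billing follows actual executions (assumed model)."""
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    requests = invocations / 1_000_000 * price_per_million_requests
    return compute + requests


def break_even_invocations(provisioned_monthly_cost: float,
                           avg_duration_s: float,
                           memory_gb: float,
                           price_per_gb_second: float,
                           price_per_million_requests: float) -> float:
    """Invocations per month at which pay-per-use equals a flat provisioned bill."""
    per_invocation = (avg_duration_s * memory_gb * price_per_gb_second
                      + price_per_million_requests / 1_000_000)
    if per_invocation <= 0:
        raise ValueError("per-invocation cost must be positive")
    return provisioned_monthly_cost / per_invocation


# Hypothetical rates, for illustration only.
RATES = dict(avg_duration_s=0.5, memory_gb=0.5,
             price_per_gb_second=0.00002, price_per_million_requests=0.20)

sporadic_bill = pay_per_use_cost(1_000_000, **RATES)
threshold = break_even_invocations(52.0, **RATES)
```

Below the threshold the pay-per-use model is cheaper under these assumptions; well above it, provisioned capacity starts to look better.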

Generally, serverless functions are a good fit for workloads that are event-driven, run briefly and need to scale on demand. They especially excel when you want to get to market quickly: you’ve got an idea and need to get code into production fast to start generating revenue. Serverless works for that, even if only as a temporary solution, because you can ship code fast using Azure Functions.

But what if your product gains traction? Eventually, you reach a point where serverless will take you no further.

As long as your business logic sits behind an API controller, it’s usually easy to migrate. You can move it into containers, adopt Kubernetes and leave serverless behind.
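One way to picture that separation is a plain function holding the business logic, with two thin adapters around it: one shaped like a function entry point and one shaped like an HTTP server you could package in a container. This is a rough sketch using only the Python standard library. `quote_price`, `function_entry_point`, `ContainerHandler` and `serve` are hypothetical names, and the entry point is not the Azure Functions API.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def quote_price(payload: dict) -> dict:
    """Hypothetical business logic: a pure function with no hosting concerns."""
    quantity = int(payload.get("quantity", 0))
    unit_price = float(payload.get("unit_price", 0.0))
    return {"total": round(quantity * unit_price, 2)}


def function_entry_point(event_body: str) -> str:
    """Serverless-style adapter: raw request body in, response body out."""
    return json.dumps(quote_price(json.loads(event_body)))


class ContainerHandler(BaseHTTPRequestHandler):
    """Container-style adapter: the same logic behind a plain HTTP server."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length).decode("utf-8"))
        response = json.dumps(quote_price(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(response)))
        self.end_headers()
        self.wfile.write(response)


def serve(port: int = 8080) -> None:
    """Blocks and serves requests; this would be the container's start command."""
    HTTPServer(("0.0.0.0", port), ContainerHandler).serve_forever()
```

Because `quote_price` knows nothing about its host, moving from the first adapter to the second changes only the wrapper, not the logic.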

Why go for containers

Although we just mentioned how serverless encourages event-driven apps… that’s not to say you can’t do event-driven, stateless programming with containers. You can.
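As a rough illustration, an event-driven, stateless worker that could run inside a container looks something like the sketch below. The in-memory `queue.Queue` is a stand-in for a real message broker, and `handle_event` and `run_worker` are hypothetical names chosen for this example.

```python
import queue


def handle_event(event: dict) -> dict:
    """Stateless handler: the result depends only on the event passed in."""
    return {"order_id": event["order_id"], "status": "processed"}


def run_worker(events: queue.Queue) -> list:
    """Pull events until a None sentinel arrives, handling each independently."""
    results = []
    while True:
        event = events.get()
        if event is None:  # sentinel used here to stop the loop
            break
        results.append(handle_event(event))
    return results


# Usage: enqueue two events, then the stop sentinel.
inbox = queue.Queue()
inbox.put({"order_id": 1})
inbox.put({"order_id": 2})
inbox.put(None)
processed = run_worker(inbox)
```

Since the handler keeps no state between events, you can run as many copies of the worker as demand requires.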

And there are so many reasons to adopt a container architecture, so let’s break down some of them:

- One of the key advantages of containers is how well they handle legacy app migration. Even older .NET apps, such as those targeting .NET Framework 4, can often be containerised quickly with just a Dockerfile and run on Windows containers.

- Unlike serverless, containers can often be started in seconds, and once built, they can run almost anywhere.

- With serverless you are more exposed to vendor lock-in; with containers you are not.

- Containers are also not limited to particular languages; they can run code written in any language.