Kubernetes or Docker: Which one is right for you?

When to choose Docker

Docker can be a great choice for single-container apps or for local development environments built with tools like Docker Compose. It doesn’t have the orchestration power of Kubernetes, but it’s well suited to spinning up multi-container environments on a single machine, especially during development.

Docker is mostly used during the development and testing phases of an application. Examples include:

- Creating consistent development environments.

- Running a microservice and its dependencies, such as a database or cache, in their own containers while you develop on your local machine.

- Running CI/CD pipelines, where containers give you consistent builds and tests across different environments.

But Docker on its own falls short if you want to build scalable, complex, distributed apps, because it does not schedule and manage containers spread across many servers.

For example, if you want to run, say, 20,000 containers across 500 servers, you need an orchestration platform like Kubernetes.
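To make that scale concrete, here is a minimal Python sketch of the kind of placement decision an orchestrator automates for you. It is a toy "least-loaded server first" strategy, not the actual Kubernetes scheduler algorithm, and the function name `place_containers` is hypothetical.

```python
import heapq


def place_containers(num_containers: int, num_servers: int) -> list[int]:
    """Assign each container to the currently least-loaded server.

    Returns a list where index i holds the number of containers on server i.
    Toy model only: real schedulers also weigh CPU, memory, affinity, etc.
    """
    # Min-heap of (current_load, server_index) so the emptiest server pops first.
    heap = [(0, server) for server in range(num_servers)]
    heapq.heapify(heap)
    loads = [0] * num_servers

    for _ in range(num_containers):
        load, server = heapq.heappop(heap)
        loads[server] = load + 1
        heapq.heappush(heap, (load + 1, server))

    return loads


loads = place_containers(20_000, 500)
print(min(loads), max(loads))  # 40 40
```

Even in this simplified form, someone has to track the load on all 500 servers and make 20,000 placement decisions, which is the bookkeeping an orchestrator takes off your hands.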

When to choose Kubernetes

Kubernetes comes into play when you deploy an application to production or other environments.

Kubernetes is super useful when you want to:

- Deploy multiple microservices with high availability

- Manage data stores with strong durability guarantees

- Run big data workloads like Apache Spark

- Or even run something smaller like cron jobs

Why use Docker and Kubernetes together

In the end, the ultimate question is: “Why use Docker and Kubernetes together?”

The answer: when you need to manage and scale many containers, Docker alone won’t be sufficient. The two technologies complement each other:

- Docker packages and runs your apps in containers

- Kubernetes handles the orchestration, ensuring those containers run reliably at scale

Together, they are essential for production environments where automated scaling, failover and management across multiple machines are required.

Now let’s look at a simple workflow of Docker and Kubernetes:

- Developers use Docker to package the application into a container, including all dependencies

- The Docker image is pushed to a container registry

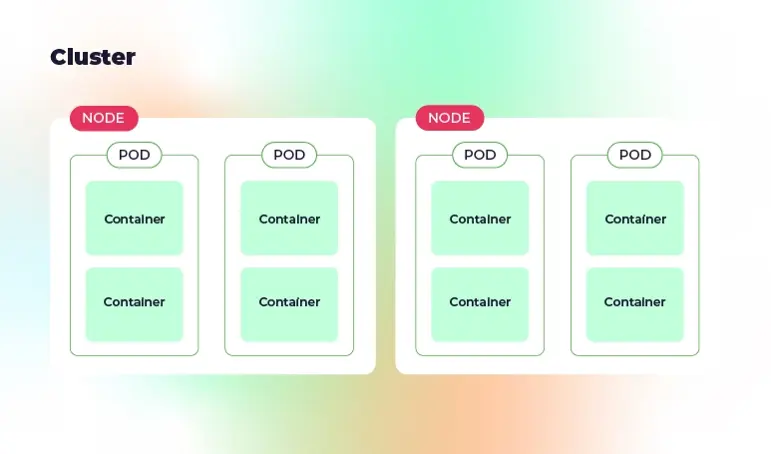

- Kubernetes pulls the image from the registry and deploys it as a pod

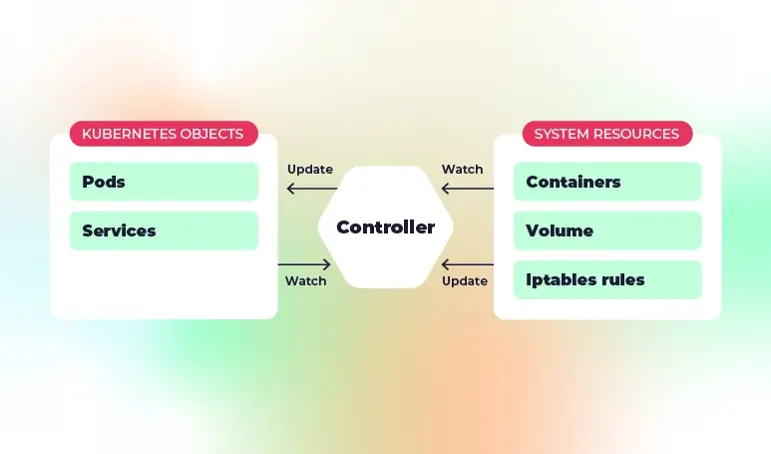

- Kubernetes then monitors the load and scales the pods horizontally, adding more replicas as needed

- It also ensures that incoming traffic is routed only to healthy containers

- If a container crashes, Kubernetes automatically restarts or replaces it so that the desired number of replicas keeps running
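The last three steps can be pictured as a control loop. The Python sketch below is a toy model, not Kubernetes code: `Pod`, `new_pod`, `reconcile`, and `route` are hypothetical names, and a crashed pod is simply replaced rather than restarted in place. The `desired_replicas` function follows the ratio-based formula described in the Horizontal Pod Autoscaler documentation, leaving out its tolerance and stabilization details; the minimum and maximum bounds are illustrative defaults.

```python
import itertools
import math
from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    healthy: bool = True


_ids = itertools.count(1)


def new_pod() -> Pod:
    """Create a fresh, healthy pod with a unique (made-up) name."""
    return Pod(name=f"web-{next(_ids)}")


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale in proportion to how far the observed metric is from its target."""
    raw = math.ceil(current * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


def reconcile(pods: list[Pod], desired: int) -> list[Pod]:
    """Drop unhealthy pods, then add or trim pods to match the desired count."""
    running = [p for p in pods if p.healthy]
    while len(running) < desired:
        running.append(new_pod())
    return running[:desired]


def route(pods: list[Pod], request_number: int) -> Pod:
    """Round-robin a request across healthy pods only."""
    healthy = [p for p in pods if p.healthy]
    if not healthy:
        raise RuntimeError("no healthy pods available")
    return healthy[request_number % len(healthy)]


pods = [new_pod() for _ in range(3)]
pods[1].healthy = False                    # simulate a crash
pods = reconcile(pods, desired=3)          # back to three healthy pods

# Observed load is 90 against a target of 60, so scale 3 -> ceil(4.5) = 5.
target = desired_replicas(len(pods), current_metric=90, target_metric=60)
pods = reconcile(pods, desired=target)
print(len(pods))  # 5
```

The key design idea is that you declare the desired state (a replica count or a target metric) and the loop keeps nudging the actual state toward it, instead of you issuing individual start and stop commands.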

Closing thoughts

We’ve discussed Kubernetes and Docker and seen that choosing between them is often unnecessary, since they serve different purposes. Rather than picking one, you can use them hand in hand to build a powerful infrastructure.

Combining Kubernetes and Docker ensures a well-integrated platform for container deployment, management, and orchestration at scale. Together, they’re the backbone of modern cloud-native applications.

This combination offers a consistent runtime, so your app runs the same across development, test, and production – regardless of the system or environment.
