Once you have embraced container technology and decided that Kubernetes is going to be your weapon of choice, you will have to dive into the nitty-gritty of it. There is a lot to learn, but a few key concepts such as Services, Pods, Ingresses, and namespaces are vital to your success.

Where do you start?

For ops, I always like to draw the comparison with more traditional on-premises solutions. Yes, there is more to it, but it does help with the basic understanding.

In most environments, data and connections flow from the Ingress to a Service and from the Service to the Pods. Sounds complicated? It doesn’t have to be. For simplicity, let’s translate this to a more traditional environment.

Ingress – This could be the router or firewall you used to manage.

Service – Let’s call this your load balancer for highly available workloads in the backend.

Pods – These run the containers, so this is your workload.

Namespaces – The room your hardware lives in.

Namespaces

Let’s get started with namespaces. I’m assuming you already have your Azure Kubernetes Service (AKS) cluster up and running. Out of the box you will have, among others, a default namespace and a kube-system namespace. If you deploy something without targeting a namespace, it will be deployed to the default namespace.

The kube-system namespace is used for objects created by the Kubernetes system itself: basically the stuff you need to keep Kubernetes up and running. Some additional services you enable (such as monitoring with Azure Monitor) are deployed into the kube-system namespace as well, while others, such as service meshes and ingress controllers, typically get a namespace of their own.

I’d like to refer to namespaces as the “resource group” (or server room in our analogy) that you deploy your things in.

Just to be very clear: namespaces are not a security boundary. Instead, they are a way to logically organize your resources. They do come with limitations, and namespaced resources are scoped to their own namespace. For instance, having a TLS secret available in one namespace doesn’t automatically make it available in other namespaces.

Then the big question is: when do I use a new namespace? And as for most things, the answer is: it depends.

It depends on what your solution looks like, your update scenario and the technologies used.

In general, you could say that everything with the same life cycle goes into a namespace. Let’s say you have a team working with a set of 5 microservices and another team working with another set of 5 microservices. To keep things logically separated, they could work in two different namespaces. And yes, services in different namespaces can communicate with each other: by default nothing blocks that traffic, so if you want to restrict it you have to do so explicitly, for example with network policies.
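As a minimal sketch of the two-team scenario, the manifests below create one namespace per team. The names `team-a` and `team-b` and the `team` label are hypothetical and only serve as illustration.

```yaml
# Hypothetical namespaces for two teams; names and labels are assumptions.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    team: team-a
---
apiVersion: v1
kind: Namespace
metadata:
  name: team-b
  labels:
    team: team-b
```

Assuming the default cluster domain `cluster.local`, a Service named `orders` (again hypothetical) in `team-b` would be reachable from a Pod in `team-a` as `orders.team-b.svc.cluster.local`.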

What you generally wouldn’t use namespaces for is to deploy the next version of your solution. You can simply deploy your new version to the same namespace, use the resources that are already there (volume claims, secrets, etc.) without having to recreate them in a new namespace. A way to separate them from a management perspective (and routing but we’ll get to that with services) would be to use labels. There’s no need to create a new namespace for this 😊

To sum up: namespaces are a great way to organize your resources however you see fit. But make sure you don’t end up with hundreds of namespaces, because that will have the opposite effect.

Ingress

This is where things get complicated. The Ingress can be seen as the first point of entry to your solution (your router). The concept of Ingress consists of two parts: the Ingress Controller and the Ingress Rules. Let’s first look at the Controller. This pretty much determines the functionality you will have. In our analogy, we call this your router hardware.

The big question is: what functionality do you need? Do you need TLS termination? Do you need a service mesh, or do you use a specific technology for traffic routing? To determine which controller works best for you, there is a great document out there that shows the pros and cons of each controller (Kubernetes Ingress Controllers - Google Spreadsheets).

Some popular controllers we come across with our customers are Nginx, Istio and Azure Application Gateway. But to each their own; look for the Ingress controller that best fits your needs!

Once you have the controller set up, you can create the Ingress rules. These are separate resources in Kubernetes and can be compared with traditional routing/firewall rules.

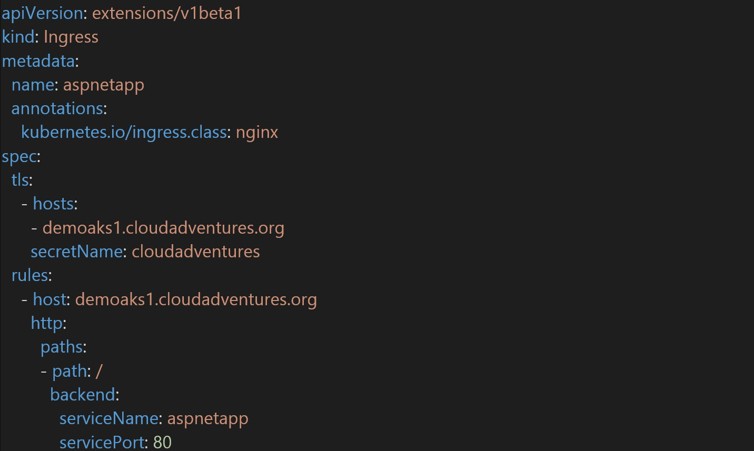

Let’s look at the following example. An Ingress rule for an Nginx Ingress Controller is created. It configures TLS termination for the domain “demoaks1.cloudadventures.org” and uses the “cloud adventures” secret, which contains the certificates for that domain. It then sends all traffic to the service “aspnetapp”. More on that service later.

Example of an Ingress Rule

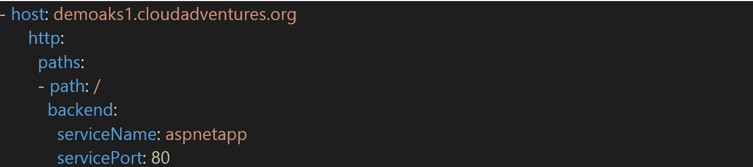
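For readers who cannot see the screenshot, a rule like the one described could look roughly as follows. This is a sketch, not the exact manifest from the image: the resource name, the secret name `cloudadventures-tls`, the ingress class `nginx` and the port number are assumptions.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: aspnetapp-ingress            # hypothetical name
spec:
  ingressClassName: nginx            # assumes the controller registered the class "nginx"
  tls:
    - hosts:
        - demoaks1.cloudadventures.org
      secretName: cloudadventures-tls  # hypothetical name for the "cloud adventures" secret
  rules:
    - host: demoaks1.cloudadventures.org
      http:
        paths:
          - path: /                  # send all traffic for this host ...
            pathType: Prefix
            backend:
              service:
                name: aspnetapp      # ... to the Service discussed later
                port:
                  number: 80         # assumed Service port
```

The TLS secret has to live in the same namespace as the Ingress resource that references it.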

Adding a new rule or domain simply requires you to add another, similar rule to the Ingress resource.
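As an illustration, an extra entry appended to the `spec.rules` list of an existing Ingress might look like the fragment below. The second domain and the Service name `otherapp` are hypothetical.

```yaml
# Fragment: one additional list item under spec.rules of an existing Ingress.
- host: demoaks2.cloudadventures.org   # hypothetical second domain
  http:
    paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: otherapp             # hypothetical Service
            port:
              number: 80               # assumed Service port
```

If the new domain should also be served over TLS, it needs a matching entry under `spec.tls` as well.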

It’s already starting to become a bit less complicated, isn’t it?

Services

We just briefly mentioned Services. A Service can be seen as a load balancer, if you will: it is the dependable endpoint in front of your Pods. In the previous example of Ingress Rules we saw that the rule doesn’t point to one specific Pod but to a Service instead. The Service acts as a load balancer between the outside world and the Pods. A Service has a private IP address and can have a public IP address as well. However, it’s generally advised that you don’t give your Service a public IP address but expose it using an Ingress Rule instead.

The Service is looking for a specific label on the Pods to determine whether it’s part of the workload you want to send traffic to. If the labels match, the Service will basically load balance and direct traffic to the available Pod(s).

In the following example we have the YAML definition of a Service. The Service is called “aspnetapp” and in this example the Pods it will load balance for have the label “app: aspnetapp”.
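A minimal sketch of such a Service definition is shown here. The Service name and the selector come from the text; the type and the port numbers are assumptions.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: aspnetapp
spec:
  type: ClusterIP        # internal (private) IP only; exposed through the Ingress
  selector:
    app: aspnetapp       # traffic goes to Pods carrying this label
  ports:
    - port: 80           # assumed port the Service listens on
      targetPort: 80     # assumed port the container listens on
```

Label keys and values are case-sensitive, so the selector has to match the Pod label exactly.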

This means that no matter how many replicas (copies) of your workload you spin up, the Service will be able to see them and direct traffic towards them.

Pods

Last but not least we have Pods. Pods are the smallest entity that you can manage on Kubernetes. A Pod can contain one or more containers. Eventually, upon deployment a Pod will be scheduled to run on a node. If your workload consists of multiple Pods, keep in mind that these pods can all run on different nodes (virtual machines). This is where the choice of how many containers you are going to run in a single pod becomes important. Generally, you would run 1 container per pod. But in tightly-coupled scenarios where 2 containers are really dependent on each other you could configure both those containers in a single pod, making sure they always run on the same node and are as close together as possible.

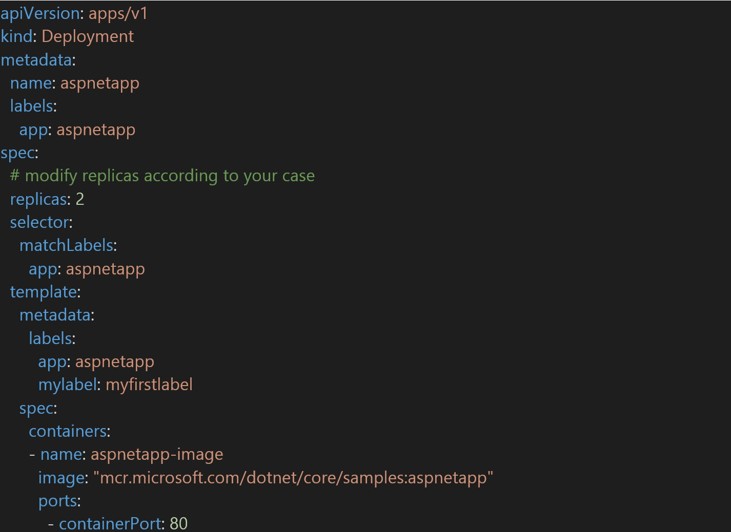

As Services look for a label to match, Pods need to have a label corresponding with that value. In the following example we have a Pod that contains a single container (aspnetapp-image) and is labeled with “app: aspnetapp”. This finalizes the configuration of Ingress to Service to Pod.
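A Pod along those lines could be sketched as follows. The container name and label are taken from the text, while the image reference and the container port are placeholders.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: aspnetapp
  labels:
    app: aspnetapp                 # matches the selector of the "aspnetapp" Service
spec:
  containers:
    - name: aspnetapp-image
      image: myregistry.azurecr.io/aspnetapp:v1   # hypothetical image reference
      ports:
        - containerPort: 80        # assumed port the application listens on
```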

Now, if we want to increase the number of Pods (because we need to scale our solution), we up the “replicas” value and more Pods will be created. We can do this without having to reconfigure our Service: the Pod definition stays the same, and the only thing that changes is the number of Pods. As long as you are careful with your labels, scaling will no longer be a challenge!
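In practice the “replicas” setting lives on a controller such as a Deployment, which wraps the Pod definition in a template. The sketch below assumes a Deployment is used; the replica count, image reference and port are illustrative.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: aspnetapp
spec:
  replicas: 3                      # illustrative; raise this number to scale out
  selector:
    matchLabels:
      app: aspnetapp               # must match the labels in the Pod template
  template:
    metadata:
      labels:
        app: aspnetapp             # the label the Service selects on
    spec:
      containers:
        - name: aspnetapp-image
          image: myregistry.azurecr.io/aspnetapp:v1   # hypothetical image reference
          ports:
            - containerPort: 80    # assumed port the application listens on
```

Scaling then comes down to changing `replicas` and re-applying the manifest, or running `kubectl scale deployment aspnetapp --replicas=5`.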

Summary

In this example of Ingresses, Services and Pods we have the following scenario:

The Ingress Controller accepts the connections, and the Ingress Rules reference the certificate and configure TLS termination. The Ingress Rule makes sure that the traffic for the URI is sent to the correct Service, and that Service uses labels to direct the traffic to the correct Pods.

For an update scenario this would mean you could deploy a completely new version of your application, create a new Service and, once you are happy with the result, change the Ingress Rule to point to your new Service and thus to the new Pods. Pretty cool, right?
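One way this switch could look is sketched below. The `version` label, the Service name `aspnetapp-v2`, the secret name, the ingress class and the ports are all assumptions made for the example.

```yaml
# Hypothetical Service for the new version; it only selects Pods labeled version: v2.
apiVersion: v1
kind: Service
metadata:
  name: aspnetapp-v2
spec:
  type: ClusterIP
  selector:
    app: aspnetapp
    version: v2                      # assumed extra label on the new Pods
  ports:
    - port: 80
      targetPort: 80                 # assumed container port
---
# The Ingress after the switch: only the backend Service name has changed.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: aspnetapp-ingress            # hypothetical name
spec:
  ingressClassName: nginx            # assumes the class "nginx" exists
  tls:
    - hosts:
        - demoaks1.cloudadventures.org
      secretName: cloudadventures-tls  # hypothetical secret name
  rules:
    - host: demoaks1.cloudadventures.org
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: aspnetapp-v2   # previously: aspnetapp
                port:
                  number: 80
```

Note that a Service selecting only on `app: aspnetapp` would also match the new Pods, so in this setup the existing Service would need a `version: v1` selector (or the new Pods a different `app` label) to keep the two versions apart.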

Of course there is much more that you can do but understanding these concepts will definitely help you get started with your first deployments.

This article is part of a series

Read all about Monitoring and Managing of AKS clusters in this follow-up article.

Previous articles in this series:

1. The evolution of AKS

2. Hybrid deployments with Kubernetes

3. Microservices on AKS

4. Update scenario's AKS

5. Linux vs. Windows containers

6. Security on AKS
