The basics: containers

I believe the evolution to AKS starts with containers. You may not notice them at first, but containers are used everywhere. The app on your phone, for example, is packaged in a comparable, self-contained way. There are many kinds of containers, but Docker containers have become the standard for Linux applications.

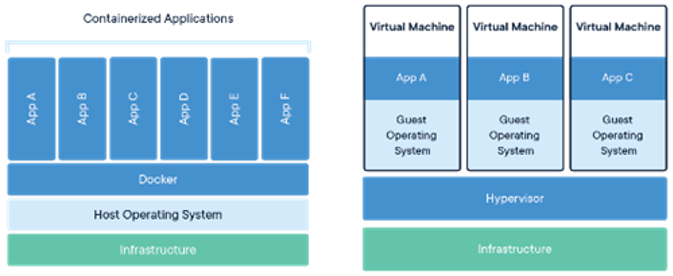

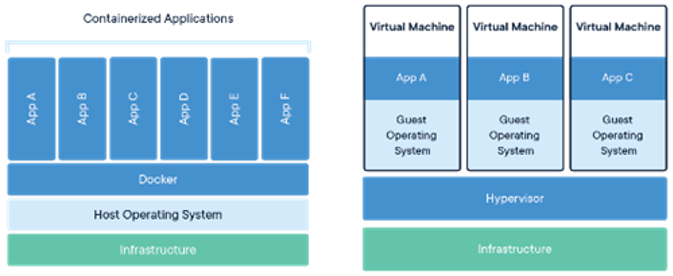

A container is a reliable way to package and run applications in multiple environments (a laptop, Microsoft Azure, a private datacentre or Amazon). This works because a container does not depend on the platform on which it is deployed. To function properly, the container holds the application together with the runtime and libraries it needs. It doesn’t matter whether the container runs on system A or B: the contents remain the same and all dependencies are provided. For a representation, see the images above.

Containers are more efficient than virtual machines (VMs) because they share the host operating system (OS) and its resources with other containers. In contrast to VMs, which each have their own OS, containers can often be started in seconds, while a VM often needs minutes. Containers are also attractive in terms of cost, because they all share the same resources.


The use of containers offers many more benefits. If you want to know more about containers, please feel free to read the article ‘write once, run anywhere’, written by Wesley Haakman.

Microservices and Containers go hand in hand

Microservices and containers fit extremely well together. In a microservice architecture, an application is split into multiple smaller services. Each service performs one specific task, nothing more. Microservices are developed independently, and a different development environment can be chosen for each service. For example, service A could use .NET Core, service B PHP and service C Node.js.

It would be very inefficient to start a separate VM for each service. Containers offer an excellent way to avoid this. By packaging each service in a container, all containers can use the same hardware. After all, a container holds not only the application but also the runtime and libraries it needs to function properly. Sharing the same hardware between multiple containers gives you a large cost benefit.

Kubernetes is THE solution for managing containers

Kubernetes is the solution for efficiently managing large numbers of containers. Kubernetes combines all VMs or servers (nodes) into one cluster. This cluster can be managed centrally, by a system administrator or by the development teams themselves.

The main advantages of Kubernetes are:

- Efficiency

Kubernetes groups containers into "Pods", which solves most management problems. You assign properties such as storage, networking and scheduling to Pods. Kubernetes then looks at the available memory and processor capacity of each node and chooses the best distribution for you. All nodes within the Kubernetes cluster are used optimally, which ultimately results in direct cost savings.
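To make the placement idea concrete, here is a minimal sketch in Python. It is a toy model, not the Kubernetes scheduler: the `Node`, `Pod` and `schedule` names, the resource numbers and the "most free capacity wins" scoring rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_free: float  # free CPU cores (hypothetical numbers)
    mem_free: int    # free memory in MiB


@dataclass
class Pod:
    name: str
    cpu: float       # requested CPU cores
    mem: int         # requested memory in MiB


def schedule(pod: Pod, nodes: List[Node]) -> Optional[Node]:
    """Place the pod on the fitting node that keeps the most capacity free."""
    # Filter: keep only nodes that can satisfy the pod's requests.
    candidates = [n for n in nodes if n.cpu_free >= pod.cpu and n.mem_free >= pod.mem]
    if not candidates:
        return None  # nothing fits, so the pod would stay pending
    # Score: prefer the node with the most CPU, then memory, left after placement.
    best = max(candidates, key=lambda n: (n.cpu_free - pod.cpu, n.mem_free - pod.mem))
    best.cpu_free -= pod.cpu
    best.mem_free -= pod.mem
    return best


nodes = [Node("node-a", 2.0, 4096), Node("node-b", 4.0, 8192)]
placements = {}
for pod in [Pod("web", 1.0, 1024), Pod("api", 2.0, 2048), Pod("worker", 1.5, 2048)]:
    target = schedule(pod, nodes)
    placements[pod.name] = target.name if target else "pending"

print(placements)  # {'web': 'node-b', 'api': 'node-b', 'worker': 'node-a'}
```

The sketch only captures the two steps described above: rule out nodes without enough free memory or CPU, then pick the best of the rest.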

- High availability through the Desired State principle

Kubernetes works according to the Desired State principle. You tell Kubernetes what you want, not how Kubernetes should achieve it. Kubernetes continuously works to match the actual state to that desired state. In the event of an update, maintenance or a failure, Kubernetes recreates or redistributes the pods to meet your requirements. It automatically ensures that the site or application stays available.
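The Desired State principle can be pictured as a small reconciliation loop. The Python below is an illustrative model only, not Kubernetes code: the `reconcile` function, the replica count and the `web-N` pod names are hypothetical.

```python
from typing import Set, Tuple


def reconcile(desired: int, running: Set[str], next_id: int) -> Tuple[Set[str], int]:
    """Compare the actual state with the desired state and correct the difference."""
    running = set(running)
    while len(running) < desired:   # too few pods: create replacements
        running.add(f"web-{next_id}")
        next_id += 1
    while len(running) > desired:   # too many pods: remove the surplus
        running.remove(sorted(running)[-1])
    return running, next_id


desired_replicas = 3
pods, next_id = reconcile(desired_replicas, set(), 0)       # initial rollout
pods.discard("web-1")                                       # simulate a crash or node failure
pods, next_id = reconcile(desired_replicas, pods, next_id)  # the loop restores the count

print(sorted(pods))  # ['web-0', 'web-2', 'web-3']
```

You only state the target of three replicas. The loop decides what to create or remove, which is the "what, not how" idea in miniature.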

- Update without downtime

By using rolling updates you can update without downtime. A rolling update is a technique for replacing pods one by one with pods that run a newer version of your application. In many cases, it is no longer necessary to update in the evening or at night. You can also update more often, because updating can be automated in Kubernetes. Bugs are fixed sooner and customers get the features they ask for faster.
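A rolling update can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the `rolling_update` function and the `v1`/`v2` labels are hypothetical, and the sketch hard-codes replacing exactly one pod at a time, whereas a real Kubernetes Deployment tunes this with settings such as `maxUnavailable` and `maxSurge`.

```python
from typing import List, Tuple


def rolling_update(pods: List[str], new_version: str) -> Tuple[List[str], int]:
    """Replace pods one at a time and record the lowest number still serving."""
    pods = list(pods)
    lowest_available = len(pods)
    for i in range(len(pods)):
        # Take one old pod out of service; the others keep serving traffic.
        available = len(pods) - 1
        lowest_available = min(lowest_available, available)
        # Bring up its replacement on the new version before moving on.
        pods[i] = new_version
    return pods, lowest_available


updated, lowest = rolling_update(["v1", "v1", "v1"], "v2")

print(updated, lowest)  # ['v2', 'v2', 'v2'] 2
```

At no point do fewer than two of the three pods serve traffic, which is why users do not notice the update.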

- Central Management

You manage your Kubernetes cluster in one place. Kubernetes has its own storage for configurations, passwords and certificates. No local configuration is needed at VM or server level. This makes the management of a Kubernetes cluster efficient, easy and clear. There is also a huge number of tools and plugins available for managing Kubernetes. You are not obliged to use these tools, but it is good to know that Kubernetes can keep growing with your organization.

Manage your Kubernetes with Azure Kubernetes Service

Setting up a Kubernetes cluster manually is a serious job and requires extensive knowledge and experience of Linux, Docker and Kubernetes. Even if you succeed in setting up a Kubernetes cluster, you still have to manage it. You need to plan how to deal with malfunctions, updates and extensions. Doing all this yourself takes a lot of time and is quite expensive. So how can you manage Kubernetes clusters properly?

Azure Kubernetes Service

Azure Kubernetes Service (AKS) is managed Kubernetes in Azure. With AKS, Microsoft takes the complex cluster management off your hands. With a few clicks or a few lines of code you have an AKS cluster in production and you can manage your containers easily.

Microsoft is one of the largest organizations actively contributing to Kubernetes. This results in excellent support for Kubernetes in Azure. A new Kubernetes version is available in Azure within two weeks of its release. Updating a cluster, normally a very complicated job, has been reduced to a single click with AKS.

The reasons why you will love AKS even more:

- It is Free! AKS is a free service. You only pay for the resources (VMs, storage and networking) your cluster uses. No additional costs are charged for the AKS PaaS service itself. Basically, the complex management of the cluster is free of charge.

- Each Kubernetes cluster needs one or more master nodes. These VMs contain the configuration and are responsible for managing the cluster. Microsoft bears the cost of the master nodes; you only pay for the VMs and workloads your AKS cluster consists of.

- AKS offers excellent integration with Azure DevOps for setting up CI/CD pipelines. For CI and CD pipelines it is essential that updates can be deployed to production systems at any time of day. With rolling updates, you can update the containers one by one without any downtime.

- The AKS clusters in Azure run in an availability set. In this way the servers of a cluster are distributed across several physically separated hardware racks. This keeps the cluster itself online during a failure of the underlying hardware. As a result, an AKS cluster stays available, and therefore so does your application.

- A cluster is almost endlessly scalable horizontally. Adding or removing VMs can be done without downtime, or even fully automatically. If you ever need extra power, VMs are added to the cluster in just a few clicks.

- Last, but not least: with Azure Kubernetes Service you have unmatched integration with other services within Azure. For example, storage, networking, monitoring and security are integrated seamlessly with Kubernetes.

This article is part of a series

In the follow-up article, read what you need to set up a Kubernetes cluster and how to put it into practice step by step.
