You use a load balancer to distribute traffic evenly across these VMs. A scale set is always placed within a subnet and a virtual network (VNet).

- The load balancer manages incoming traffic to these virtual machines, which are grouped into a backend pool: the set of VMs that handles your incoming requests.

- The load balancer is automatically updated as the scale set scales out (adds VMs) or scales in (removes VMs). This keeps your app responsive and available without manual intervention.

- By using a backend pool, your application no longer depends on a single virtual machine to handle traffic. Instead, a group of virtual machines shares the load.

- If a VM fails its health probe, the load balancer stops sending new traffic to it and reroutes requests to the healthy instances.

This setup prevents outages and keeps your application available to users without interruption.
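To make the backend pool behavior concrete, here is a minimal Python sketch of a pool that grows and shrinks with a scale set and only offers healthy members for new traffic. It is a toy in-memory model, not the Azure SDK: `BackendPool` and its methods are hypothetical names, and the health flag stands in for the result of a health probe.

```python
from dataclasses import dataclass, field


@dataclass
class BackendPool:
    """Toy model of a backend pool (hypothetical, not an Azure SDK class)."""

    # Maps VM name -> healthy flag.
    members: dict[str, bool] = field(default_factory=dict)

    def scale_out(self, vm: str) -> None:
        # A new scale set instance joins the pool; assumed healthy here.
        self.members[vm] = True

    def scale_in(self, vm: str) -> None:
        # A removed scale set instance leaves the pool entirely.
        self.members.pop(vm, None)

    def set_health(self, vm: str, healthy: bool) -> None:
        # In a real deployment this is driven by health probe results.
        if vm in self.members:
            self.members[vm] = healthy

    def eligible_targets(self) -> list[str]:
        # Only healthy members receive new traffic.
        return sorted(vm for vm, ok in self.members.items() if ok)


pool = BackendPool()
for name in ("vm-1", "vm-2", "vm-3"):
    pool.scale_out(name)
pool.set_health("vm-2", False)   # vm-2 fails its health probe
print(pool.eligible_targets())   # ['vm-1', 'vm-3']
```

Keeping the VM in the pool while marking it unhealthy lets it rejoin as soon as it recovers, whereas `scale_in` models the instance being deleted.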

Health probe

A health probe is a periodic check that the load balancer sends to each VM in the backend pool to detect whether it is healthy enough to receive traffic. If a VM stops responding to health probes, it is considered unhealthy and the load balancer stops sending it new traffic.
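Turning individual probe results into a healthy or unhealthy verdict can be modeled as a small state machine. This is a sketch under assumptions: `ProbeTracker` is a hypothetical name, the thresholds (two consecutive failures to mark a VM down, one success to bring it back) are illustrative defaults, and in Azure the interval and threshold are settings on the probe rather than code you write.

```python
class ProbeTracker:
    """Hypothetical helper: turns individual probe results into a health state."""

    def __init__(self, unhealthy_threshold: int = 2, healthy_threshold: int = 1) -> None:
        # Illustrative defaults, not Azure's values.
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy_threshold = healthy_threshold
        self.healthy = True
        self._failures = 0
        self._successes = 0

    def record(self, probe_ok: bool) -> bool:
        """Record one probe result and return the resulting health state."""
        if probe_ok:
            self._successes += 1
            self._failures = 0  # only consecutive failures count
            if not self.healthy and self._successes >= self.healthy_threshold:
                self.healthy = True
        else:
            self._failures += 1
            self._successes = 0
            if self.healthy and self._failures >= self.unhealthy_threshold:
                self.healthy = False
        return self.healthy


tracker = ProbeTracker(unhealthy_threshold=2)
results = [tracker.record(ok) for ok in (True, False, False, True)]
print(results)  # [True, True, False, True]
```

Requiring several consecutive failures keeps a single dropped probe from pulling a VM out of rotation.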

Azure Load Balancer supports two types of health probe:

- TCP Health Probe

- HTTP & HTTPS Health Probe

TCP Health Probe

The load balancer attempts a TCP connection to each VM in the backend pool on a specified port. If the VM is actively listening on that port, the TCP handshake completes and the probe succeeds. If the connection is refused or times out, the probe fails. After several consecutive failed probes, the VM is marked as unhealthy and stops receiving new traffic.
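A TCP probe boils down to "can I complete a handshake on this port?". The minimal Python sketch below checks exactly that with the standard `socket` module; the function name `tcp_probe` and the 5-second timeout are assumptions for illustration, not Azure settings.

```python
import socket


def tcp_probe(host: str, port: int, timeout: float = 5.0) -> bool:
    """Return True if a TCP handshake with host:port completes within timeout.

    A refused connection, a timeout, or any other socket error counts as a
    failed probe.
    """
    try:
        # create_connection performs the TCP three-way handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `tcp_probe("10.0.0.4", 80)` would report whether a VM at that hypothetical private address accepts connections on port 80. Catching `OSError` covers refused connections and timeouts in one place, since both are `OSError` subclasses.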

HTTP & HTTPS Health Probe

An HTTP or HTTPS probe sends an HTTP GET request to a specified path on each VM in the backend pool. If the response is HTTP 200, the VM is marked as healthy; any other response causes the probe to fail. After several consecutive failures, the VM is considered unhealthy and stops receiving new traffic.
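An HTTP probe can be sketched with the standard library's `urllib`. Assumptions: the function name `http_probe` and any health path you pass (for example `/health`) are hypothetical, and redirects are deliberately not followed, so a 301 or 302 counts as a failure like any other non-200 status. For an `https://` URL, `urllib` also verifies the server certificate by default; that is a property of this sketch, not a statement about Azure's probes.

```python
import http.client
import urllib.request


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    """Refuse to follow redirects so a 3xx surfaces as an HTTPError."""

    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None


_OPENER = urllib.request.build_opener(_NoRedirect)


def http_probe(url: str, timeout: float = 5.0) -> bool:
    """Return True only if a GET to url answers with status 200."""
    try:
        with _OPENER.open(url, timeout=timeout) as resp:
            return resp.status == 200  # e.g. 204 is a 2xx but still a failure
    except (OSError, http.client.HTTPException):
        # HTTPError (non-2xx, including unfollowed 3xx), refused connections,
        # timeouts and TLS failures are OSError subclasses; malformed
        # responses raise http.client.HTTPException.
        return False
```

Combined with a consecutive-failure counter, this gives the "after several failures" behavior described above.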

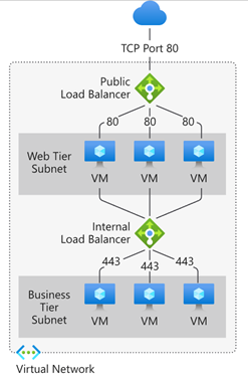

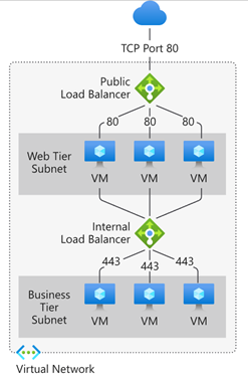

Azure Load Balancer Deployment Topologies

When you create a load balancer, you can choose between two deployment topologies:

- A public load balancer

- An internal load balancer

So, what are they exactly, and what is the difference between internal and external load balancers in Azure?

Public load balancer

A public load balancer uses a public IP address as its frontend, which exposes it to the internet.

It routes internet traffic to the backend virtual machines inside your Azure virtual network. Essentially, it maps a public IP address and port to the private IP addresses and ports of the backend VMs, allowing users and apps outside Azure to reach services running on those VMs.

This setup is common for public-facing applications such as websites or APIs.
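Conceptually, a load-balancing rule on a public load balancer maps a frontend (public IP, port) pair to a backend port on the private IPs of the pool members. The sketch below models that mapping with Python's standard `ipaddress` module. The `LoadBalancingRule` class is hypothetical, and the addresses come from a documentation range (203.0.113.0/24) and a private range (10.0.0.0/8).

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadBalancingRule:
    """Hypothetical model of one rule on a public load balancer."""

    frontend_ip: str    # public IP address exposed to the internet
    frontend_port: int  # port clients connect to
    backend_port: int   # port the VMs listen on (may differ from the frontend port)

    def translate(self, dst_ip: str, dst_port: int, backend_ip: str) -> tuple[str, int]:
        """Rewrite a frontend destination to the chosen backend VM's private address."""
        if (dst_ip, dst_port) != (self.frontend_ip, self.frontend_port):
            raise ValueError("traffic does not match this rule")
        if not ipaddress.ip_address(backend_ip).is_private:
            raise ValueError("backend VMs are addressed by private IPs in the VNet")
        return backend_ip, self.backend_port


rule = LoadBalancingRule(frontend_ip="203.0.113.10", frontend_port=80, backend_port=8080)
print(rule.translate("203.0.113.10", 80, backend_ip="10.0.0.4"))  # ('10.0.0.4', 8080)
```

The sketch takes the chosen VM as an input; picking it is the load balancer's hashing step.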

Internal Load Balancer

In contrast, an internal load balancer doesn't expose a public IP; its frontend uses a private IP instead. It operates entirely within your Azure virtual network, so it can only be reached by internal resources, such as VMs in the same network or on-premises systems connected through a VPN.

It's typically used to route traffic between internal tiers, such as web servers and database servers, for private backend communication.

If you're running a hybrid cloud setup, you can configure the Azure Load Balancer frontend to be reachable from your on-premises network.
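Because an internal load balancer's frontend is a private IP, only clients in networks connected to the VNet can reach it. The sketch below expresses that idea as a prefix check; the address plan (a VNet at 10.0.0.0/16 and an on-premises range at 192.168.0.0/16 reached over VPN) and the function name are hypothetical, and in Azure actual reachability is determined by routing, peering, and network security groups rather than by code like this.

```python
import ipaddress

# Hypothetical address plan: the VNet plus an on-premises range connected over VPN.
CONNECTED_PREFIXES = [
    ipaddress.ip_network("10.0.0.0/16"),     # Azure VNet
    ipaddress.ip_network("192.168.0.0/16"),  # on-premises network via VPN
]


def can_reach_internal_frontend(client_ip: str) -> bool:
    """True if client_ip lies in a network connected to the VNet."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in CONNECTED_PREFIXES)


print(can_reach_internal_frontend("10.0.2.15"))     # True  (VM in the VNet)
print(can_reach_internal_frontend("192.168.1.20"))  # True  (on-premises over VPN)
print(can_reach_internal_frontend("198.51.100.7"))  # False (internet client)
```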

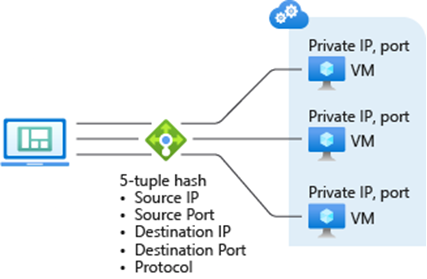

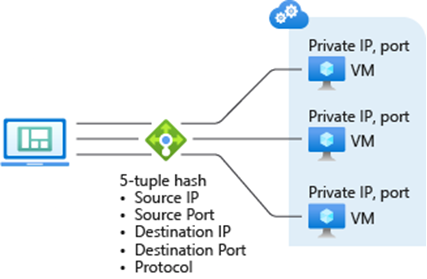

What is session persistence in Azure Load Balancer?

Session persistence in Azure Load Balancer ensures that requests from a client are consistently routed to the same backend instance. This is especially helpful for stateful apps, where a client's session must be preserved across multiple requests.

Put simply, session persistence makes sure that a client is always served by the same virtual machine.

By default, Azure Load Balancer distributes traffic across your virtual machines using a 5-tuple hash, which is calculated from:

- Source IP

- Destination IP

- Source port

- Destination port

- Protocol

For example:

- If the first request comes from client machine one, the hash might send it to virtual machine one.

- If another request comes from client machine two, with different source details, the hash could send it to virtual machine two.

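This kind of distribution works fine when it doesn't matter which virtual machine handles each request. Note, though, that the source port usually changes with every new TCP connection, so even consecutive connections from the same client can be sent to different VMs.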
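The sketch below illustrates 5-tuple hashing. It does not reproduce the hash function Azure actually uses: SHA-256 over the joined fields, the VM names, and the addresses (taken from documentation ranges) are all assumptions. What it does show is the behavior described above: an identical 5-tuple always lands on the same VM, while a new source port, which is what a new connection usually gets, can land on a different one.

```python
import hashlib

BACKEND_POOL = ["vm-1", "vm-2", "vm-3"]  # hypothetical VM names


def pick_vm(src_ip: str, dst_ip: str, src_port: int,
            dst_port: int, protocol: str) -> str:
    """Map a flow to a VM by hashing its 5-tuple (illustrative SHA-256 hash)."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{protocol}".encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return BACKEND_POOL[digest % len(BACKEND_POOL)]


client, frontend = "198.51.100.7", "203.0.113.10"  # documentation addresses

# Packets of the same flow share a 5-tuple, so they always reach the same VM.
vm = pick_vm(client, frontend, 50001, 443, "TCP")
print(vm == pick_vm(client, frontend, 50001, 443, "TCP"))  # True

# New connections from the same client get new source ports and may spread out.
print(sorted({pick_vm(client, frontend, port, 443, "TCP")
              for port in range(50001, 50021)}))
```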

However, in some scenarios you need the same VM to handle all traffic from a specific user. This is what session persistence provides.

A common example is a shopping cart on an e-commerce site.

Example: e-commerce site

If a customer adds items to their cart, that state is often held in memory on a specific virtual machine. If future requests from that customer go to a different VM, the cart could appear empty or be lost entirely. The same applies to situations like file uploads or remote desktop sessions. These require all traffic to stick with one virtual machine to avoid breaking the session or disrupting performance.

To achieve this, you use Azure Load Balancer distribution modes.

Azure Load Balancer Distribution Modes

Distribution Modes let you control how traffic is distributed and make it possible to keep a user tied to a specific virtual machine during a session.

Azure Load Balancer offers three distribution modes:

- None: The load balancer sends client traffic to any VM in the backend pool based on a 5-tuple hash.

- Client IP: Traffic from the same client IP address is always forwarded to the same VM.

- Client IP and protocol: Traffic from the same client IP address and the same protocol is always forwarded to the same VM.
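The three modes differ only in which fields feed the hash. Azure's documentation describes Client IP as a 2-tuple (source IP, destination IP) and Client IP and protocol as a 3-tuple (source IP, destination IP, protocol), so the frontend's destination IP is part of the key too. The sketch below models that field selection; the `Mode` enum, the `pick_vm` helper, the SHA-256 hash, and the addresses are illustrative assumptions, not Azure's implementation.

```python
import hashlib
from enum import Enum

BACKEND_POOL = ["vm-1", "vm-2", "vm-3"]  # hypothetical VM names


class Mode(Enum):
    NONE = "None"                                      # 5-tuple
    CLIENT_IP = "Client IP"                            # 2-tuple
    CLIENT_IP_AND_PROTOCOL = "Client IP and protocol"  # 3-tuple


def hash_key(mode: Mode, src_ip: str, dst_ip: str,
             src_port: int, dst_port: int, protocol: str) -> tuple:
    """Return the flow fields that feed the hash in the given mode."""
    if mode is Mode.NONE:
        return (src_ip, dst_ip, src_port, dst_port, protocol)
    if mode is Mode.CLIENT_IP:
        return (src_ip, dst_ip)  # ports and protocol are ignored
    return (src_ip, dst_ip, protocol)  # ports are ignored


def pick_vm(mode: Mode, *flow) -> str:
    """Hash the mode-specific key onto the pool (illustrative SHA-256 hash)."""
    key = "|".join(map(str, hash_key(mode, *flow))).encode()
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return BACKEND_POOL[digest % len(BACKEND_POOL)]


# One client opening TCP and UDP flows from many source ports.
flows = [("198.51.100.7", "203.0.113.10", port, 443, proto)
         for port in range(50001, 50011) for proto in ("TCP", "UDP")]

print(len({pick_vm(Mode.CLIENT_IP, *f) for f in flows}))  # 1: every flow hits one VM
```

With `Mode.CLIENT_IP_AND_PROTOCOL`, the same flows collapse to at most two VMs: one for the TCP key and one for the UDP key.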

None

The default distribution mode is None. In this mode, the load balancer uses all five elements of the 5-tuple (source IP, destination IP, source port, destination port, and protocol) to calculate the hash that decides which machine receives the traffic.

Client IP

The second mode is Client IP. In this case, the load balancer uses only the client's IP address (together with the frontend IP it connects to) to decide where to send the traffic. That means all traffic from the same client IP to the same frontend, regardless of protocol or port, goes to the same virtual machine. If one user opens multiple connections or sessions, one VM consistently handles everything, but a second user with a different IP could be routed to another VM.

Client IP and Protocol

The third option, Client IP and Protocol, is useful when the same user reaches your application over different transport protocols.

Here, "protocol" means the transport protocol (TCP or UDP), not an application protocol such as HTTP or FTP, both of which run over TCP. With this mode, traffic is separated by both the client IP and the transport protocol in use.

So, a client's TCP traffic might always go to one VM, while its UDP traffic could be routed to a different one. This offers more flexibility when the same client uses multiple types of traffic that don't need to share session state.

Azure Basic vs Standard: What are the differences?

So, what’s the difference between the Azure Basic Load Balancer and the Standard Load Balancer?

The table below breaks it down:

| Feature | Standard | Basic |
| --- | --- | --- |
| Backend pool endpoints | Any VM or scale set in a single virtual network | VMs in a single availability set or scale set |
| Health probes | TCP, HTTP, HTTPS | TCP, HTTP |
| Availability Zones | Yes | No |
| Diagnostics | Rich metrics in Azure Monitor | Basic metrics in Azure Log Analytics (public LB only) |
| HA ports | Yes | No |
| Secure by default | Yes | No |

Azure Load Balancer vs other Azure Services

So, you're probably wondering: "Which load balancer is best in Azure?" The answer depends on your scenario.

- Azure Load Balancer is built for regional deployments, meaning it distributes traffic across virtual machines within the same region. It works at layer 4 (TCP and UDP) and is best suited to non-HTTP(S) workloads.

- For web applications, especially those needing layer 7 routing features like SSL termination or path-based routing, Application Gateway is a better fit.

- If your deployment spans multiple regions, you can combine Load Balancer with Traffic Manager for DNS-based global distribution.

Think of these services as modular tools – stack them together to build the right traffic routing strategy across regional and global scopes.

The table below shows you when to use which service:

| Service | Global/regional | Recommended traffic |
| --- | --- | --- |
| Azure Front Door | Global | HTTP(S) |
| Traffic Manager | Global | Non-HTTP(S) |
| Application Gateway | Regional | HTTP(S) |
| Azure Load Balancer | Regional | Non-HTTP(S) |
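The service table reduces to two questions: does the deployment span regions, and is the traffic HTTP(S)? The hypothetical helper below encodes only that rule of thumb; real designs often stack services (for example, Traffic Manager in front of regional load balancers), which a single lookup can't capture.

```python
def recommend_service(spans_regions: bool, is_http: bool) -> str:
    """Pick a service per the table: scope first, then traffic type."""
    if spans_regions:
        return "Azure Front Door" if is_http else "Traffic Manager"
    return "Application Gateway" if is_http else "Azure Load Balancer"


print(recommend_service(spans_regions=False, is_http=False))  # Azure Load Balancer
```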

Azure Load Balancer Pricing: How much does it cost?

With Azure Load Balancer, you only pay for what you use. The tables below break down Azure Load Balancer pricing for resources deployed in the West Europe region:

Standard Load Balancer Pricing Table

| Standard Load Balancer | Regional Tier Price | Global Tier Price |
| --- | --- | --- |
| First 5 rules | $0.025/hour | $0.025/hour |
| Additional rules | $0.01/rule/hour | $0.01/rule/hour |
| Inbound NAT rules | Free | Free |
| Data processed (GB) | $0.005 per GB | No additional charge* |
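To sanity-check a monthly bill, the regional column can be turned into arithmetic. This sketch assumes 730 hours per month (a common approximation), at least one load-balancing rule, and that the flat $0.025/hour charge covers rules one to five; the constants are copied from the table, and the function name is hypothetical.

```python
HOURS_PER_MONTH = 730  # common approximation of an average month

FIRST_5_RULES_PER_HOUR = 0.025  # USD, flat charge covering rules 1-5 (assumed)
EXTRA_RULE_PER_HOUR = 0.01      # USD per additional rule
DATA_PER_GB = 0.005             # USD per GB processed (regional tier)


def standard_lb_monthly_cost(rules: int, gb_processed: float,
                             hours: float = HOURS_PER_MONTH) -> float:
    """Estimate a monthly bill for a regional Standard Load Balancer.

    Inbound NAT rules are free and are not counted in `rules`.
    """
    if rules < 1:
        raise ValueError("this sketch assumes at least one load-balancing rule")
    extra_rules = max(0, rules - 5)
    rule_cost = (FIRST_5_RULES_PER_HOUR + extra_rules * EXTRA_RULE_PER_HOUR) * hours
    return round(rule_cost + gb_processed * DATA_PER_GB, 2)


# 7 rules and 1,000 GB: (0.025 + 2 * 0.01) * 730 + 1000 * 0.005 = 32.85 + 5.00
print(standard_lb_monthly_cost(rules=7, gb_processed=1000))  # 37.85
```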

Gateway Load Balancer Pricing Table

| Gateway Load Balancer | Price |
| --- | --- |
| Gateway hour | $0.013/hour |
| Chain hour | $0.01/hour |
| Data processed (GB) | $0.004 per GB |
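Gateway Load Balancer can be estimated the same way. In this sketch, the number of chains is a hypothetical input and each chain is assumed to accrue one chain hour per hour alongside the gateway hour; the 730-hour month is an approximation, and the rates are copied from the table.

```python
HOURS_PER_MONTH = 730  # common approximation of an average month

GATEWAY_PER_HOUR = 0.013  # USD per gateway hour
CHAIN_PER_HOUR = 0.01     # USD per chain hour (assumed: one per chain per hour)
DATA_PER_GB = 0.004       # USD per GB processed


def gateway_lb_monthly_cost(chains: int, gb_processed: float,
                            hours: float = HOURS_PER_MONTH) -> float:
    """Estimate a monthly Gateway Load Balancer bill (illustrative only)."""
    hourly = GATEWAY_PER_HOUR + chains * CHAIN_PER_HOUR
    return round(hourly * hours + gb_processed * DATA_PER_GB, 2)


# 1 chain, 500 GB: (0.013 + 0.01) * 730 + 500 * 0.004 = 16.79 + 2.00
print(gateway_lb_monthly_cost(chains=1, gb_processed=500))  # 18.79
```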